Reinforcement Learning and Its Challenges in Large Language Models

Reinforcement Learning (RL) plays a crucial role in scaling Large Language Models (LLMs). It enables them to solve complex tasks such as competition-level math problems and programming through deeper reasoning, and these capabilities are central to recent progress in AI. However, keeping training dynamics stable and reliable as RL is scaled up with more compute is a fundamental challenge. The largest models generate long responses and go through many gradient updates, so they need RL optimization methods that stay stable throughout training.

Current state-of-the-art algorithms such as GRPO suffer from serious stability issues when training very large language models, often ending in catastrophic and irreversible collapse. These instabilities stem from a misapplication of importance sampling weights. GRPO computes a weight for every token from a single sampled token, which cannot perform meaningful distribution correction and instead introduces high-variance noise into the gradient. This noise accumulates as responses lengthen and is amplified by the clipping mechanism. The result is not only model collapse but also an obstacle to realizing the full potential of LLMs.

Model collapse refers to a state in which the model’s performance degrades, through excessive noise or inconsistent objectives, until it can no longer perform its assigned tasks correctly. When that happens, most of the compute and time already invested in training is wasted. Consequently, there is a strong need for algorithms that can effectively manage this noise and keep training stable.

Limitations of Existing Approaches and the Need for Innovation

Existing methods such as PPO and GRPO rely on clipping to handle the challenges of off-policy learning, in which responses are sampled from an outdated policy. These approaches are limited by inconsistent objectives: rewards are assigned to whole responses, while the corrections are applied token by token. This gap widens in large models performing tasks with long responses. The clipping mechanism, meant to limit fluctuations, can itself become a source of instability.

Token-level importance sampling in GRPO introduces high-variance noise that can drive the model into irreversible collapse. Attempts to recover from collapse by tuning hyperparameters or restoring earlier checkpoints fail, which points to a fundamental design flaw rather than a tuning problem. This mismatch between token-level corrections and sequence-level rewards is what prompted the search for an approach that optimizes directly at the sequence level to ensure stability and scalability.
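To make the token-level weighting concrete, here is a minimal Python sketch of a GRPO-style clipped surrogate for a single response. It assumes the per-token log-probabilities under the current and old policies are already available as plain lists; the function name and the ε = 0.2 default are illustrative choices, not taken from any library. Each token gets its own importance weight computed from that one sampled token, while all tokens share the response’s single advantage.

```python
import math
from typing import Sequence

def grpo_token_surrogate(logp_new: Sequence[float], logp_old: Sequence[float],
                         advantage: float, eps: float = 0.2) -> float:
    """GRPO-style clipped surrogate for ONE response (a value to maximize)."""
    total = 0.0
    for lp_new, lp_old in zip(logp_new, logp_old):
        # Token-level importance weight, estimated from a single sampled token.
        w = math.exp(lp_new - lp_old)
        w_clipped = min(max(w, 1.0 - eps), 1.0 + eps)
        # Every token reuses the same response-level advantage.
        total += min(w * advantage, w_clipped * advantage)
    # Average the per-token terms over the response length.
    return total / len(logp_new)

# Two tokens whose weights move in opposite directions (about 1.105 and 0.607).
value = grpo_token_surrogate([-1.0, -2.0], [-1.1, -1.5], advantage=1.0)
```

The two token weights in the usage line disagree even though they belong to one response with one reward; over long responses, many such independent, noisy weights feed the gradient.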

These shortcomings mean that, although GRPO works well in some scenarios, it is poorly suited to large language models that produce long, complex outputs. Small errors in token-level gradient estimates can add up to large deviations over an entire sequence, and these deviations accumulate across training steps, steering the model toward an undesirable state.

Introducing Group Sequence Policy Optimization (GSPO)

Researchers from the Qwen Team at Alibaba Inc. have proposed Group Sequence Policy Optimization (GSPO), a reinforcement learning algorithm designed for training LLMs. GSPO’s core innovation is its importance ratio, which is defined on the likelihood of the entire sequence rather than of individual tokens and is therefore consistent with the basic principle of importance sampling. This gives GSPO a principled foundation for controlling noise in the learning process.
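A minimal Python sketch of that sequence-level ratio, following the GSPO paper’s definition: the sequence likelihood ratio raised to the power 1/|y|, which equals the exponential of the per-token log-ratios averaged over the response length. The inputs are assumed to be per-token log-probabilities of one sampled response under the current and old policies; the function name is hypothetical.

```python
import math
from typing import Sequence

def sequence_importance_ratio(logp_new: Sequence[float],
                              logp_old: Sequence[float]) -> float:
    """Length-normalized ratio s_i = (pi_new(y|x) / pi_old(y|x)) ** (1 / |y|)."""
    if not logp_new or len(logp_new) != len(logp_old):
        raise ValueError("expected equal-length, non-empty per-token log-prob lists")
    # The sum of per-token log-ratios is the log of the full sequence likelihood ratio.
    log_ratio = sum(new - old for new, old in zip(logp_new, logp_old))
    # Dividing by |y| takes the |y|-th root, i.e. the geometric mean of token ratios.
    return math.exp(log_ratio / len(logp_new))

s = sequence_importance_ratio([-1.0, -2.0], [-1.1, -1.5])  # about exp(-0.2) = 0.819
```

Because the log-ratios are averaged, a single token with an unusual weight moves s_i much less than it moves its own token-level weight, and the length normalization keeps s_i on a comparable scale for short and long responses.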

In addition, GSPO samples multiple responses to each query and uses their normalized rewards as advantages, so the optimization objective is consistent with the sequence-level rewards. This consistency is key to stable training and better performance. By optimizing at the sequence level, GSPO avoids the problems caused by scattered, noisy token-level corrections.
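The group-normalized advantage can be sketched as follows: each response’s reward is standardized against the other responses to the same query. This is a minimal sketch that assumes the population standard deviation and a small `eps` guard against division by zero; implementations differ on both choices, and the function name is hypothetical.

```python
import statistics
from typing import List, Sequence

def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Normalize the rewards of G responses to one query into advantages."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (an assumption; some use sample std)
    # eps avoids division by zero when every response in the group gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]

# Four responses to one query: two judged correct (reward 1), two incorrect (reward 0).
adv = group_advantages([1.0, 0.0, 1.0, 0.0])  # roughly [1, -1, 1, -1]
```

Responses that beat their group’s average get a positive advantage and the rest get a negative one. In GSPO this single value weights the whole response.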

Empirical evaluations show that GSPO significantly outperforms GRPO in training stability, efficiency, and overall performance. GSPO also stabilizes RL training of large Mixture-of-Experts (MoE) models without the complex stabilization techniques GRPO requires. This simplifies the training process and lets these models realize their full capability. The results suggest that GSPO can pave the way for more robust and efficient LLMs.

Empirical Validation and Efficiency of GSPO

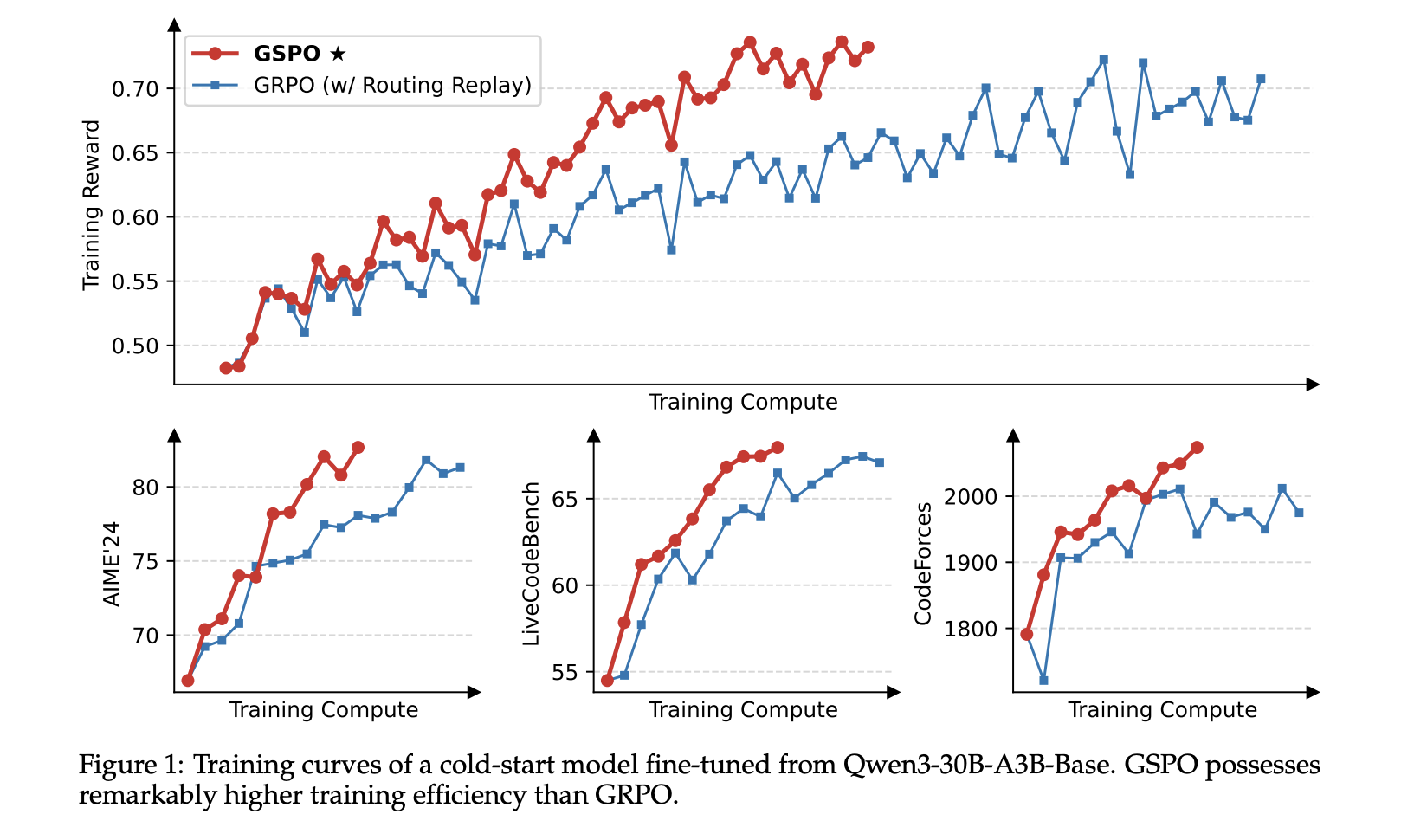

The researchers ran their experiments on a cold-start model fine-tuned from Qwen3-30B-A3B-Base. They report training reward curves and model performance curves on the AIME’24, LiveCodeBench, and CodeForces benchmarks, which consist of challenging math and programming problems that test a model’s capacity for deep reasoning and problem solving.

During training, the rollout data from each batch was split into four mini-batches, each used for a separate gradient update. All responses in the batch were sampled before the first update, so every mini-batch after the first is off-policy with respect to the current weights. This is exactly the setting in which the importance ratio and clipping determine whether training stays stable.
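The batch-splitting step can be sketched as a simple contiguous split. This is an illustrative helper with a hypothetical name; it assumes order is preserved, whereas real trainers often shuffle the rollouts first.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

def split_into_minibatches(rollouts: Sequence[T], num_minibatches: int = 4) -> List[List[T]]:
    """Split one rollout batch into num_minibatches contiguous, near-equal chunks."""
    if num_minibatches <= 0:
        raise ValueError("num_minibatches must be positive")
    size, extra = divmod(len(rollouts), num_minibatches)
    chunks, start = [], 0
    for k in range(num_minibatches):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if k < extra else 0)
        chunks.append(list(rollouts[start:end]))
        start = end
    return chunks

# Ten rollouts, all sampled by pi_old before any update in this training step.
minibatches = split_into_minibatches(list(range(10)))
```

Only the first mini-batch is consumed while the weights still equal π_old. The other three are used after one, two, and three updates, which is why their importance ratios drift away from 1.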

GSPO clips entire responses instead of individual tokens, with the lower and upper clipping ranges set to 3e-4 and 4e-4, respectively. As a result, the fraction of clipped tokens differs by two orders of magnitude between the two algorithms, with GSPO clipping far more than GRPO. Yet despite excluding more tokens from gradient estimation, GSPO achieves higher training efficiency. This outcome indicates that GRPO’s token-level gradient estimates are noisy and make inefficient use of samples. Which samples clipping removes matters more than how many it removes.
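Here is a minimal sketch of the sequence-level clipped objective for one group, using the 3e-4 and 4e-4 values above as the lower and upper clipping ranges. It assumes the sequence ratios and group advantages have already been computed. The function name is hypothetical, and counting a response as "clipped" when the clipped branch wins the min (so the response contributes no gradient) is our own accounting choice.

```python
from typing import Sequence, Tuple

def gspo_surrogate(seq_ratios: Sequence[float], advantages: Sequence[float],
                   lengths: Sequence[int], eps_low: float = 3e-4,
                   eps_high: float = 4e-4) -> Tuple[float, float]:
    """Return (group objective to maximize, fraction of tokens in clipped responses)."""
    total, clipped_tokens = 0.0, 0
    for s, a, n in zip(seq_ratios, advantages, lengths):
        s_clipped = min(max(s, 1.0 - eps_low), 1.0 + eps_high)
        # If the clipped branch wins the min, the whole response gets zero gradient,
        # so all n of its tokens count as clipped.
        if s_clipped * a < s * a:
            clipped_tokens += n
        total += min(s * a, s_clipped * a)
    # Average over the G responses in the group.
    return total / len(seq_ratios), clipped_tokens / sum(lengths)

obj, clip_frac = gspo_surrogate([1.0, 1.01, 0.99], [1.0, 1.0, 1.0], [10, 20, 30])
```

In the usage line, the second response’s ratio of 1.01 exceeds 1 + 4e-4 while its advantage is positive, so all 20 of its tokens are excluded (a clipped fraction of 1/3). The ranges are tiny compared with typical token-level values such as 0.2 because a length-normalized sequence ratio stays very close to 1.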

Benefits of GSPO for MoE Model Training and RL Infrastructure

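GSPO offers significant advantages for training Mixture-of-Experts (MoE) models. In MoE models, the experts activated for the same response can change noticeably after each gradient update. This makes GRPO’s token-level importance ratios fluctuate and destabilizes training. GSPO depends only on the likelihood of the whole sequence, which stays stable even when individual expert activations shift. As a result, it trains MoE models stably without workarounds like Routing Replay, which caches the experts activated under the old policy and replays them when computing importance ratios. This lets MoE models fully exploit their potential and simplifies the training process.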

In RL infrastructure, GSPO’s sequence-level optimization reduces reliance on exact token-level probabilities, making it more tolerant of precision mismatches between the training and inference engines. This makes it possible to use the likelihoods returned by the inference engine directly, avoiding costly recomputation with the training engine, which is particularly useful for partial rollouts and multi-turn RL.
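The following toy illustration shows why sequence-level ratios tolerate small numeric mismatches. It assumes the policy has not changed, so every exact ratio would be 1, and that the inference engine’s log-probabilities differ from the training engine’s by independent, zero-mean Gaussian noise. That noise model is a simplifying assumption, not a measurement of any real engine, and the function name is hypothetical.

```python
import math
import random
from typing import Tuple

def precision_mismatch_demo(length: int = 1000, noise_std: float = 0.01,
                            seed: int = 0) -> Tuple[float, float]:
    """Return (largest token-level ratio deviation from 1, sequence-level deviation from 1)."""
    rng = random.Random(seed)
    # Assumed noise model: each old-policy log-prob from the inference engine is off
    # by independent zero-mean Gaussian noise; the true ratio is exactly 1.0.
    noise = [rng.gauss(0.0, noise_std) for _ in range(length)]
    # Token-level weights exp(logp_train - logp_infer), one per token.
    token_ratios = [math.exp(-e) for e in noise]
    # GSPO-style ratio: per-token log-ratios are averaged before exponentiating.
    seq_ratio = math.exp(-sum(noise) / length)
    max_token_dev = max(abs(w - 1.0) for w in token_ratios)
    return max_token_dev, abs(seq_ratio - 1.0)

token_dev, seq_dev = precision_mismatch_demo()
```

Under this noise model, averaging 1,000 independent errors shrinks their standard deviation by a factor of about √1000 ≈ 32. The sequence-level deviation is therefore typically far smaller than the worst token-level deviation.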

GSPO also simplifies the RL infrastructure for training large-scale language models, reducing operational complexity and improving overall system scalability. A more stable and efficient training foundation makes it easier to develop and deploy powerful LLMs for a range of applications, and researchers can focus on innovation in other aspects of AI.

Conclusion and Future Outlook

In conclusion, the researchers have introduced Group Sequence Policy Optimization (GSPO), a reinforcement learning algorithm for training Large Language Models (LLMs). Grounded in the principles of importance sampling, GSPO performs clipping, rewarding, and optimization at the sequence level, overcoming the instability and inefficiency observed in GRPO. This approach paves the way for more stable and efficient LLM training and holds considerable potential for future advances in AI.

GSPO’s superior training stability, efficiency, and scalability, especially for Mixture-of-Experts (MoE) models, make it a robust algorithmic foundation. With GSPO, more complex models can be trained with greater confidence, improving their ability to handle challenging tasks. These gains benefit both research and practical applications.

GSPO has contributed to the strong performance of the latest Qwen3 models. Building on GSPO as a foundational approach, the researchers plan to further scale reinforcement learning methods, opening the door to transformative advances in AI.

For further reading, please refer to the original paper: https://arxiv.org/abs/2507.18071